People who work in areas close to technological innovation are used to dealing with change and have learned to react quickly. The world of Kubernetes and cloud-native technologies is a prime example of a highly dynamic field. In the current situation around the coronavirus pandemic, however, the everyday challenges of new technologies seem to take a back seat. After all, the first priority is to secure the basics for the continued existence of the company and its digital infrastructure.

This is where cloud-native technologies, already in place in many organizations, can do their part for fast, fail-safe and flexible IT. And there will also be a time after the great chaos that may follow the first shockwave.

What will happen then to innovation in IT and digitalization? Will it be put “on hold” by Corona and its consequences, or has its time come right now?

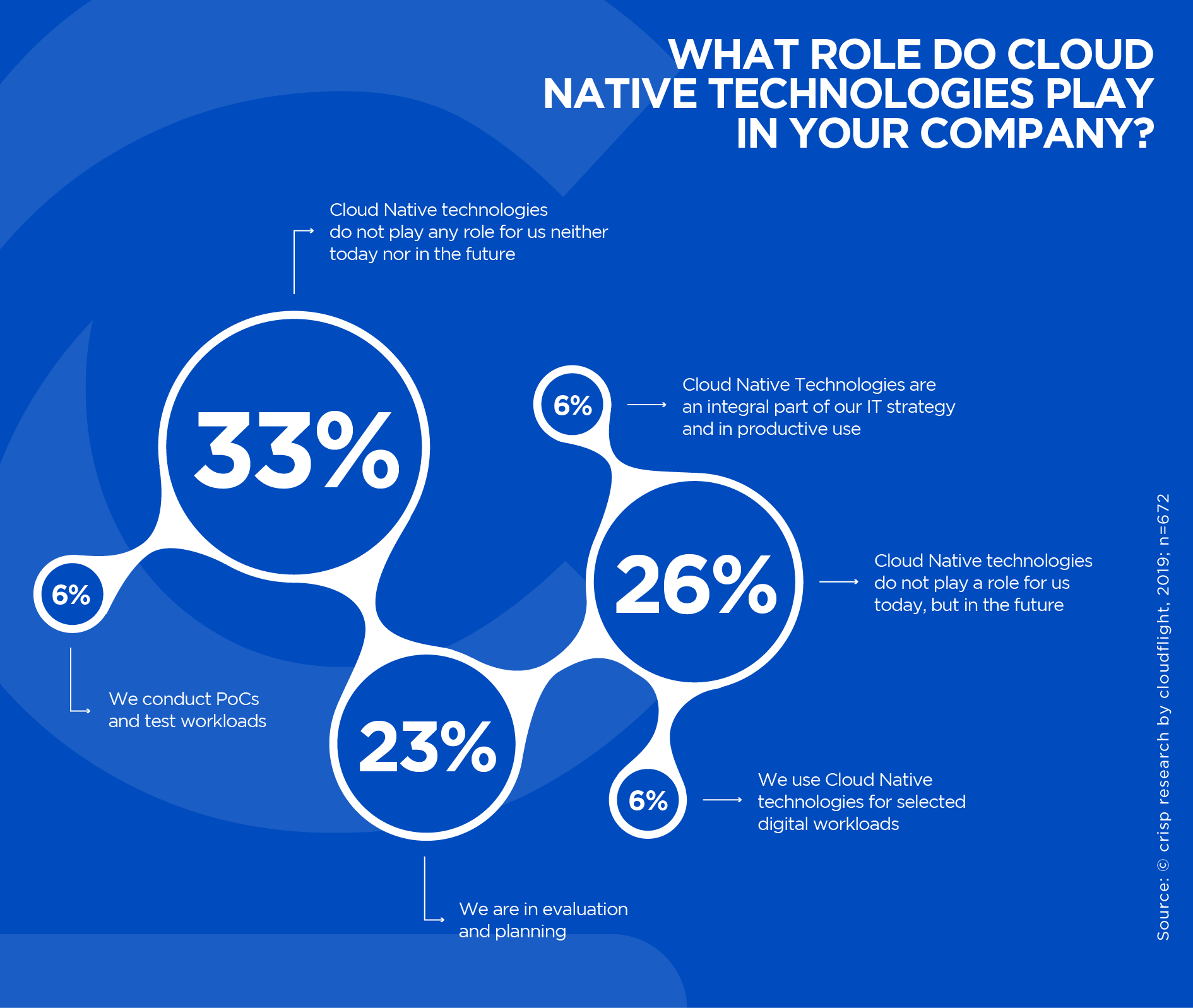

This spring, Cloudflight and its research brand Crisp Research will publish the first study on the status of cloud native in Germany. Exclusive early results show that two-thirds of companies are planning to use not only Kubernetes but also other technologies from the cloud-native landscape, especially open-source solutions for operating cloud and non-cloud infrastructures according to the latest standards. 2020 will be the year of Cloud Native, against all odds!

Cloud Native & Kubernetes Trends 2020

- Kubernetes Prod 2.0: Last year, many companies had very positive experiences running Kubernetes in production. Setting up and operating agile environments and application concepts with Kubernetes and cloud-native tools has delivered the desired gains in agility, flexibility, performance and cost. Many companies are now taking the next step in Kubernetes operations: optimizing their clusters and trimming workloads to be as efficient as possible. Scaling workloads within a Kubernetes cluster as requirements change (more load or less load) will be key to running an agile, innovative platform even in particularly cost-sensitive areas, and to delivering services with the performance users are accustomed to.

- Kubernetes-native software boom: In the early days of Kubernetes, the software to be run in containers usually already existed, or at least its functionality and architecture were fixed. To fully exploit the advantages of Kubernetes and better support modern operating models, however, adaptations are necessary. To a certain extent, Kubernetes has moved into focus as an operations and virtualization layer. Kubernetes has now reached the point where companies and developers can build their applications directly on Kubernetes and container clusters and tailor them specifically to that environment. Kubernetes-native software will therefore become increasingly important in the coming months and help define the design of modern applications.

- Kubernetes on the Edge: Kubernetes has long ceased to be reserved for large public-cloud infrastructures. Users have been experimenting with Kubernetes in on-premise environments for years, sometimes with specific stacks and operational requirements. With the hype around, and growing importance of, edge computing, especially for embedded systems and IoT architectures, Kubernetes is also moving to the edge. KubeEdge is an exciting project here: it extends the management and deployment capabilities of Kubernetes and Docker to this environment so that packaged applications can run on devices or at the edge.

- Kubernetes goes Machine Learning: Alongside IoT and edge computing, machine learning as a megatrend is also on the agenda of decision-makers and the cloud-native community. Data-based business models are slowly beginning to pay off, and companies are identifying more and more successful use cases for using and monetizing data from their own value chains and ecosystems. Kubeflow is the relevant project here. Its developers aim to simplify deployments of machine learning workflows on Kubernetes and thereby increase the portability and scalability of machine learning platforms.

- Cloud Native in the data center: Kubernetes and cloud-native technologies were originally developed for use in and for the public cloud. However, solutions for managing application and container clusters and infrastructure were used in on-premise architectures early on. In the great wave of M&A, virtualization specialist VMware snapped up the container start-up Heptio. Now a completely restructured, Kubernetes-based platform for version 7 of the vSphere suite is becoming generally available to customers. This lays the foundation for Kubernetes to matter outside the cloud, in the data center, and to spread rapidly throughout companies.

- Kubernetes as a new port for packaged software: With Kubernetes, many companies and software providers have discovered a way to run existing standard software on a container basis. Decomposing the application opens up new ways of using it. ISVs now also offer many of their standard solutions directly on containers and Kubernetes, including existing middleware products such as those Software AG offers on Amazon Web Services. This trend will intensify this year, making Kubernetes a factor not only for custom-built software but also for packaged apps and middleware.

- The secret winner, OpenShift: Red Hat put on-premise and multi-cloud environments built around Kubernetes at the center of its product development early on. With OpenShift, it offers a Kubernetes distribution that, as a platform-as-a-service, provides considerably more services than the Kubernetes framework itself. Its developers have carefully combined the advantages of open source, leading tools from the cloud-native world and Red Hat’s long experience to make OpenShift available for any infrastructure environment: any public cloud, private cloud or on-premise environment, or a combination of these. As a result, more and more companies and developers are successfully relying on OpenShift to build, operate and evolve containerized applications.

- Hire or Buy or Automate: The skills needed to master cloud-native environments and run Kubernetes are still extremely scarce. Once cloud-native technologies have become critical to a company’s digital and software strategies, at the latest, the company must also be able to maintain them continuously. Very few companies already have this skill set in-house or can build it up quickly. Their remaining options are to hire new employees with these skills, to engage service providers who specialize in this area, or to set up, together with their own IT, service providers and available tools, an automated environment that takes over a significant share of the necessary tasks. Each option has advantages and disadvantages in terms of cost, quality and sustainability. In the end, the question is how intensively, and for how critical workloads, cloud-native technologies should be established in the company.
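The “Kubernetes Prod 2.0” trend centers on scaling workloads up and down with load. As a rough illustration, here is a minimal Python sketch of the replica calculation that the Kubernetes documentation describes for the HorizontalPodAutoscaler: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), where the metric is averaged across pods, no scaling happens while the ratio stays within a tolerance of 1.0 (0.1 by default), and the result is bounded by the autoscaler's minReplicas and maxReplicas. The function name and default bounds are hypothetical; the real controller also handles missing metrics, unready pods and stabilization windows, which this sketch leaves out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA-style replica calculation (hypothetical helper, not controller code)."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    # Ratio of the observed per-pod average metric to the target value
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count
        proposed = current_replicas
    else:
        # Scale proportionally and round up so capacity is never undershot
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured minReplicas/maxReplicas bounds
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    print(desired_replicas(4, current_metric=90, target_metric=60))
    print(desired_replicas(4, current_metric=20, target_metric=60))
```

For example, four replicas averaging 90% CPU against a 60% target yield six replicas, while the same four replicas at 20% scale in to two. Rounding up rather than down is what keeps a scale-in from cutting capacity below the target.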

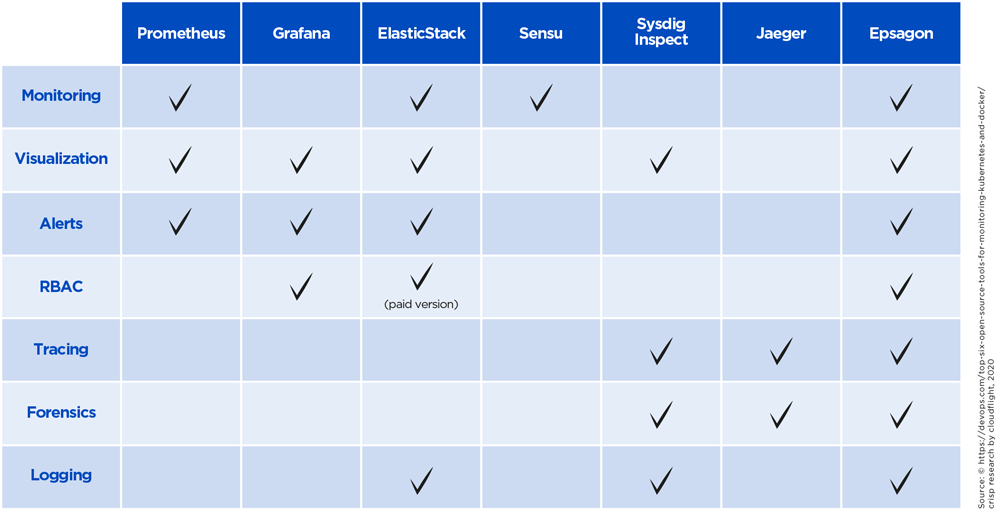

- Moving Beyond Kubernetes: Kubernetes is and remains the shooting star of the cloud-native world, around which all projects and offerings are built. However, a world of technologies is developing alongside Kubernetes that is becoming increasingly important in practice and is increasingly commoditizing Kubernetes itself. Kubernetes and, above all, its distributions will remain important in the long term, but in the future the tools around them will make the difference. Once Kubernetes is available everywhere in the company, even in different flavours, the right tools for developing, managing, monitoring and optimizing the infrastructure will be needed. Whether tracing, service mesh or platform visualization, any of these services can make the difference by shortening the go-to-market cycles of applications, reducing operational costs or increasing platform performance. The choice of technologies from the Kubernetes ecosystem is therefore becoming more and more of a success factor.

- The end of Docker’s dominance: Just as Kubernetes came to dominate container orchestration, Docker was long the leading container technology itself. Now, however, this dominance finally seems to be in question. On the one hand, there are direct alternatives; on the other, there are other ways to run applications in containers and manage pods (groups of containers). Technologies such as containerd, CRI-O or Knative are entering the scene, some of which require considerably less coding effort or enable serverless operation. Together with the somewhat unclear future following the sale of Docker’s enterprise business to Mirantis, the variety of container tools will probably increase before a new wave of consolidation sets in.

- Microservices with Service Mesh: Microservices architectures are slowly but surely shaping companies’ business-critical digital applications. Existing monoliths have been partially rebuilt and redeveloped, and new digital platforms are split into small-scale services from the start. For automated communication and management between these services, service mesh tools such as Linkerd or Istio have proven themselves; Linkerd, for example, is hosted by the Cloud Native Computing Foundation (CNCF), whose project maturity levels indicate how established a technology is in the CNCF universe. These tools will be particularly important for companies and their digital platforms, so it is worth taking a close look at how service meshes are used and what they do.

- Tracing Boom: Alongside service meshes, (distributed) tracing is becoming increasingly important for microservices architectures. In distributed systems, tools like Jaeger (which graduated within the CNCF at the end of 2019) help monitor transactions across the network, perform root cause analysis and optimize network performance and latency. This matters more and more as platform architectures grow and the dependencies among microservices multiply. The OpenTelemetry project, formed from the merger of OpenTracing and OpenCensus, provides a common set of APIs and libraries for collecting telemetry from cloud-native systems and can export it to backends such as Jaeger and Prometheus.

- Most Wanted: Standards: The flood of projects and services currently offered in the cloud-native environment also reveals a growing problem. Companies and developers must orchestrate not only their microservices landscapes but also numerous management tools. With countless projects serving different purposes, only a few real standards exist. Focusing on leading tools, aggregating services and agreeing on common standards is becoming increasingly important to keep track of things and ensure the architecture can grow over the long term. This is where the CNCF, the maintainers of leading projects and the users themselves are called upon to agree on such standards.

- Proprietary Services vs. Open Source Stacks: Cloud-native and open-source technologies have already become an integral part of the large cloud providers’ offerings. However, the providers are still (rightly) interested in developing and commercializing their own services. Whether analytics services, database services, management tools on the cloud platforms or tools and frameworks for hybrid cloud and data center management, each has a specific purpose and is tailored to the provider’s stack. Currently, many of these services, e.g. DynamoDB or Simple Queue Service (SQS) from AWS, are still leading, and open-source alternatives are not clearly superior. In the future, however, users may legitimately ask whether an open-source stack could meet their requirements equally well while offering more flexibility. This will be a key question in architecture evaluations in 2020.

- Kubernetes Service Providers – ready to rule them all: When companies want to develop new digital platforms based on cloud-native technologies and architectures, it is almost impossible to avoid a provider that specializes in exactly that. Planning, building, developing and operating containerized applications and integrating them with the entire IT landscape will only work if the people involved are familiar with the technologies, keep the goals in mind and know how to achieve them. These service providers will therefore be in particular demand this year, as they are often what makes efficiency gains in existing systems, or new digital platforms, possible at all. Tackling such projects self-taught and entirely on their own could end up being negligent and costly for companies.
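The tracing trend rests on context propagation: each service passes a trace identifier along with its outgoing calls so that the spans of one request can later be stitched together for root cause analysis or latency optimization. The following minimal Python sketch handles the `traceparent` header defined by the W3C Trace Context specification, which OpenTelemetry supports. It is not the Jaeger or OpenTelemetry API; the helper names are hypothetical, and only version `00` of the header is accepted.

```python
import re
import secrets
from dataclasses import dataclass

# W3C Trace Context "traceparent": version-traceid-parentid-flags (lowercase hex)
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


@dataclass(frozen=True)
class SpanContext:
    trace_id: str  # shared by every span of one end-to-end request
    span_id: str   # identifies one operation/hop within that request
    sampled: bool  # whether the trace should be recorded


def parse_traceparent(header: str) -> SpanContext:
    """Parse an incoming traceparent header (hypothetical helper)."""
    match = _TRACEPARENT.match(header.strip())
    if not match:
        raise ValueError(f"invalid traceparent: {header!r}")
    trace_id, span_id, flags = match.groups()
    # The specification treats all-zero IDs as invalid
    if trace_id == "0" * 32 or span_id == "0" * 16:
        raise ValueError("all-zero trace or span id is invalid")
    # Bit 0x01 of the flags field is the "sampled" flag
    return SpanContext(trace_id, span_id, bool(int(flags, 16) & 0x01))


def start_child(parent: SpanContext) -> SpanContext:
    """Same trace ID, fresh 8-byte span ID: this links the hops into one trace."""
    return SpanContext(parent.trace_id, secrets.token_hex(8), parent.sampled)


def to_traceparent(ctx: SpanContext) -> str:
    """Serialize a context for the outgoing request to the next service."""
    return f"00-{ctx.trace_id}-{ctx.span_id}-{'01' if ctx.sampled else '00'}"


if __name__ == "__main__":
    incoming = parse_traceparent("00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01")
    child = start_child(incoming)
    print(to_traceparent(child))
```

A service receiving the example header from the W3C specification would parse it, start a child span before calling a downstream service and send the serialized child context along. The trace ID stays the same across all hops while the span ID changes, which is what lets a backend such as Jaeger reconstruct the full request path.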

Corona vs. Cloud Native

Uncertainty and crisis management are plaguing companies. New innovation projects are being put on hold for now, as companies first have to get their contingency plans under control. This calls for particularly stable and fail-safe IT, and cloud-native tools can help ensure the monitoring and performance of the systems. All of this requires speed. In addition, for many companies, the corona crisis is all about elasticity: some have almost completely shut down their operations, while others, particularly in the medical sector, are multiplying their efforts and thus their need for IT infrastructure. Here, container and cloud-native technologies can already support business survival strategies.

In addition, there will be a period after “Corona” in which digital business concepts and platforms will be important again, or more important than ever, to ensure companies’ growth after difficult times. Even if Corona remains a constant companion in our world, current examples sometimes clearly show where companies still have homework to do on digitization, whether with respect to partners, employees or customers. Those who use the transition period to set the course now can emerge as winners.

Read more recommendations for decision-makers, as well as additional background information from the world of Kubernetes and the Cloud Native Computing Foundation in our report, which you can download free of charge.