Currently, conversations about artificial intelligence (AI) are everywhere. Scrolling through your LinkedIn feed, you will find numerous posts related to the great potential of AI – in every possible business sector. Since the rise of ChatGPT, AI has been discussed extensively in media and politics. Even in private gatherings, you have a compelling small-talk topic at hand. Hopes and fears emerge simultaneously, fed by the impression that AI is capable of solving almost every task, perhaps even more efficiently than we can. One could get the impression that intelligent solutions are well on their way to fundamentally reshaping all major industries.

So how come, according to the IBM Global AI Adoption Index 2022, only 35% of participating companies stated that they are adopting artificial intelligence?

The main barriers cited are limited AI expertise or knowledge, high prices, and a lack of tools and platforms for developing AI models. Besides these resource constraints, another crucial factor is trustworthiness: four out of five companies reported that being able to explain how their AI arrived at a decision is important to their business. [IBM Global AI Adoption Index 2022]

At Cloudflight, we provide access to relevant resources and knowledge. We also focus on explainability and trust, developing personalized solutions that ensure user acceptance.

Designing AI systems for trust

The success or failure of an AI product is not defined by its performance alone. If target users do not see a benefit in applying it, or do not consider it trustworthy, there is an increased risk that they will stick to legacy solutions. This not only wastes development costs and effort, it may also slow down the digital transformation of your company. Fortunately, there are effective approaches to counteract these risks.

Explainable AI Approaches

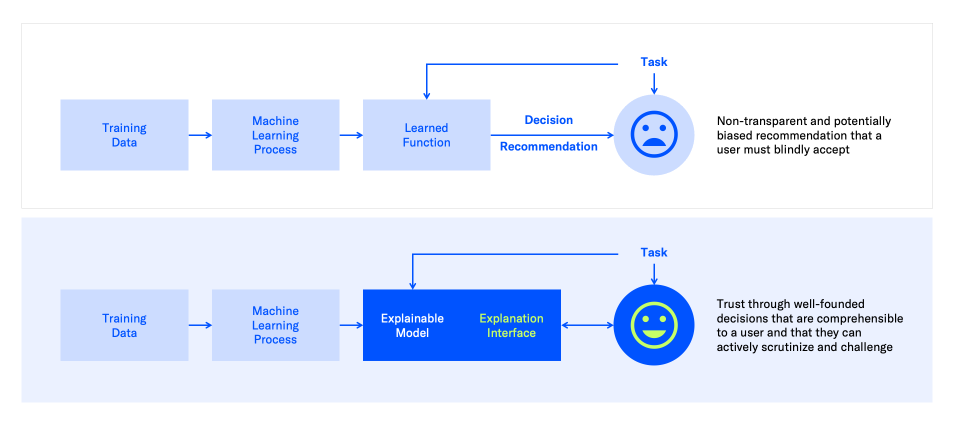

One possible technique is explainable AI (XAI). Depending on their complexity, machine learning (ML) models can become ‘black boxes’: there is an input and an output, but how the model transforms the input into the output is difficult or impossible to understand. XAI aims to provide clear explanations for the decisions or predictions of ML models, so that users can understand the underlying rationale and see how the inputs (e.g. individual features) affect the output.
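To make "how input features affect the output" concrete, here is a minimal sketch of permutation feature importance. This is one common model-agnostic XAI technique, chosen here purely for illustration; it is not necessarily the method used in any particular project. The sketch assumes the model is exposed as a plain Python function that maps a feature vector to a class label. The toy model and data are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], int]


def accuracy(model: Model, X: List[List[float]], y: List[int]) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(
    model: Model, X: List[List[float]], y: List[int], n_repeats: int = 10, seed: int = 0
) -> List[float]:
    """Mean accuracy drop when each feature column is shuffled independently.

    A large drop means the model relies on that feature; a drop near zero
    means the feature barely influences the output.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical toy model: only feature 0 matters, feature 1 is ignored.
    toy_model = lambda row: int(row[0] > 0.5)
    X = [[i / 10, (i * 7 % 10) / 10] for i in range(10)]
    y = [toy_model(row) for row in X]
    print(permutation_importance(toy_model, X, y))
```

In this example, feature 1 receives an importance of exactly zero because the toy model never reads it, while feature 0 receives a positive score. Permutation importance describes the model's global behavior. Explaining individual predictions requires other techniques.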

Explainable artificial intelligence guarantees transparency and security

Applying XAI is crucial in many projects. However, depending on the type of application and the user's level of AI knowledge, demonstrating the functionality and reasoning of a complex model can lead to information overload. In such cases it is more effective to highlight what is relevant and present the (unexplained) result in an interpretable, easily graspable way. Furthermore, state-of-the-art generative Large Language Models (LLMs), such as ChatGPT, are simply too large for established XAI approaches to be applied. In most applications of generative models, it is even more important to guarantee ethical correctness and to verify statements and claims.
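One way to "display relevancy" without overwhelming users is to show the result together with only its few most influential factors. The minimal sketch below assumes that signed per-feature contributions are already available, for example from an XAI method. The feature names are hypothetical.

```python
from typing import Dict


def summarize_result(prediction: str, attributions: Dict[str, float], k: int = 2) -> str:
    """Show the result plus only the k most influential factors.

    `attributions` maps feature names to signed contributions; the full
    list can still be offered to expert users on request.
    """
    top = sorted(attributions.items(), key=lambda item: abs(item[1]), reverse=True)[:k]
    factors = ", ".join(
        f"{name} ({'raises' if weight >= 0 else 'lowers'} the score)" for name, weight in top
    )
    return f"{prediction}. Most relevant factors: {factors}."


if __name__ == "__main__":
    print(summarize_result(
        "Recommended",
        {"years_of_experience": 0.42, "certifications": 0.1, "missing_required_skill": -0.3},
    ))
```

Ranking by absolute contribution ensures that a strong negative factor is shown as well as the positive ones.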

Trust but verify: AI regulatory compliance

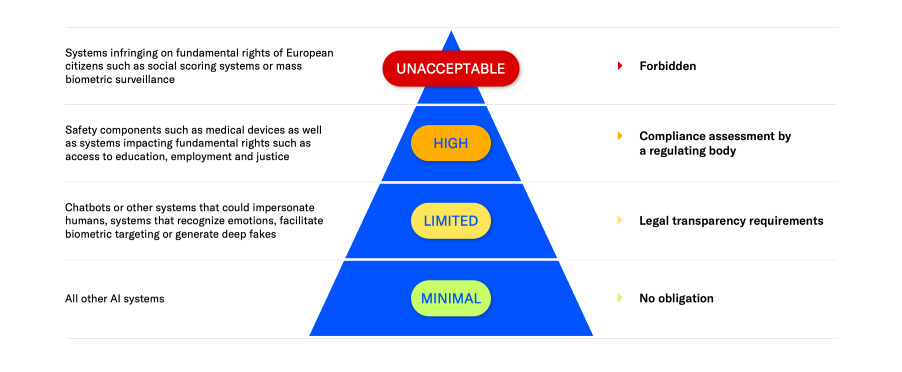

To illustrate the importance of tailoring solutions to individual applications, let’s take a look at the risk classification for AI applications in the European Union’s proposed AI Act.

By ensuring the protection of individual rights and freedoms and establishing verifiable requirements for AI systems, the AI Act offers a chance to build trust and acceptance for AI solutions across different industries.

Unacceptable Risk

AI systems that violate fundamental rights, and therefore constitute a threat to the citizens of the European Union, are forbidden. They include systems that attempt cognitive behavioral manipulation (especially of vulnerable populations), social scoring applications, and many real-time and remote biometric identification systems. There are strictly prescribed exceptions to this ban in the interest of national security or the prosecution of serious crimes.

High Risk

This category covers AI applications that may have adverse impacts or risks if not properly managed. Examples include AI systems used in healthcare, recruitment, education, or law enforcement. High-risk AI systems need to comply with specific requirements, such as transparent documentation, clear user instructions, and human oversight to minimize risks and ensure safety.

For such systems, it is therefore a high priority that results are comprehensible and that the relationship between input and output is clear.

For image classification tasks, established XAI techniques such as heat-map highlighting combined with reference samples can be used successfully. For recruitment systems, the goal is instead to relate the input features, which are most likely demographic and biographic applicant data, to the final prediction. For example, it’s acceptable for longer employment periods to lead to positive recommendations. However, we must ensure that demographic attributes like gender, age, or nationality (and the social biases inherent in them) do not unduly influence an AI system’s recommendations.
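A simple first check that a protected attribute does not drive recommendations is to swap only that attribute and measure how much the score changes. The minimal sketch below assumes the model is exposed as a scoring function over a dictionary of candidate features. Field names, scorers, and any tolerance you compare the result against are hypothetical.

```python
from typing import Any, Callable, Dict, List, Sequence

Candidate = Dict[str, Any]


def attribute_flip_sensitivity(
    score: Callable[[Candidate], float],
    candidates: List[Candidate],
    attribute: str,
    values: Sequence[Any],
) -> float:
    """Largest score change caused by swapping only `attribute`.

    Every other field of each candidate is held fixed, so any change
    is caused by the protected attribute alone.
    """
    worst = 0.0
    for candidate in candidates:
        scores = [score({**candidate, attribute: value}) for value in values]
        worst = max(worst, max(scores) - min(scores))
    return worst


if __name__ == "__main__":
    people = [{"employment_years": 5, "gender": "f"}, {"employment_years": 2, "gender": "m"}]
    # Hypothetical scorers: one ignores gender, one is biased.
    fair = lambda c: 0.1 * c["employment_years"]
    biased = lambda c: 0.1 * c["employment_years"] + (0.2 if c["gender"] == "m" else 0.0)
    for name, scorer in [("fair", fair), ("biased", biased)]:
        print(name, attribute_flip_sensitivity(scorer, people, "gender", ["f", "m", "d"]))
```

This check only detects direct dependence on the attribute. Proxies, such as other features that correlate with nationality or age, still require group-level bias analysis on real data.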

Before launch, high-risk AI systems are assessed and certified for adherence to the safety requirements, and further assessments have to be carried out over the course of the system’s life. Companies bringing these systems to market must therefore invest in robust Machine Learning Operations (MLOps) and compliance procedures.
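One common MLOps building block for ongoing assessments is monitoring whether live input data drifts away from the data the model was validated on. The minimal sketch below uses the population stability index (PSI). This technique is named here as an illustration; it is not something the AI Act prescribes. The bin cut points and the 0.1/0.25 thresholds are a widely used rule of thumb and are assumptions, not regulatory values.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_shares(values: Sequence[float], cut_points: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values per bin; sorted cut points define len(cut_points) + 1 bins."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect_right(cut_points, v)] += 1
    # Floor empty bins at eps so the logarithm below stays finite.
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(
    reference: Sequence[float], live: Sequence[float], cut_points: Sequence[float]
) -> float:
    """PSI = sum over bins of (live_i - ref_i) * ln(live_i / ref_i)."""
    ref = bin_shares(reference, cut_points)
    cur = bin_shares(live, cut_points)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_status(psi: float) -> str:
    # Rule-of-thumb thresholds (assumption), not legal requirements.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate shift: investigate"
    return "significant shift: re-assess the model"


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]   # hypothetical validation data
    live = [0.5 + i / 200 for i in range(100)]  # hypothetical production data
    psi = population_stability_index(reference, live, [0.25, 0.5, 0.75])
    print(round(psi, 3), drift_status(psi))
```

In practice, a check like this would run on a schedule for each important input feature. A "significant shift" result would then trigger the re-assessment that the regulation requires.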

Limited Risk

This category includes AI applications with less potential for harm or where the risks are more manageable. These systems typically have a lower impact on individuals’ rights and safety. Although there are fewer regulatory requirements compared to the higher-risk levels, these AI systems still need to adhere to transparency, user information, and traceability obligations.

Typical examples are chatbots in various forms, e.g. automated customer support, or information extraction solutions such as question-answering systems. These tasks are solved with LLMs, so, as mentioned above, classic XAI methods are not feasible. Although there are concepts for visualizing the attention of the underlying transformer models, verifying that generated text consists of fact-checked, non-discriminatory claims and answers leads to a better user experience. A step-by-step verification process can help ensure this: classification or iterative prompting can be used to ensure non-discriminatory output, followed by a combination of techniques that confirm statements and claims, for example using the Google Search API.
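The step-by-step verification described above can be sketched as a small pipeline. In this minimal sketch, all three checks are passed in as functions. `is_discriminatory`, `extract_claims`, `find_evidence`, and `regenerate` are hypothetical stand-ins for a text classifier, a claim extractor, a search-backed evidence lookup, and a re-prompting call. No real search or LLM API is shown.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class VerificationResult:
    approved: bool
    answer: str
    reason: str
    unsupported_claims: List[str] = field(default_factory=list)


def verify_answer(
    answer: str,
    is_discriminatory: Callable[[str], bool],
    extract_claims: Callable[[str], List[str]],
    find_evidence: Callable[[str], List[str]],
    regenerate: Optional[Callable[[str], str]] = None,
    max_retries: int = 2,
) -> VerificationResult:
    # Step 1: screen the output; optionally re-prompt the LLM (iterative prompting).
    retries = 0
    while is_discriminatory(answer):
        if regenerate is None or retries >= max_retries:
            return VerificationResult(False, answer, "discriminatory content")
        answer = regenerate(answer)
        retries += 1
    # Step 2: every extracted claim needs at least one supporting source.
    unsupported = [claim for claim in extract_claims(answer) if not find_evidence(claim)]
    if unsupported:
        return VerificationResult(False, answer, "unsupported claims", unsupported)
    return VerificationResult(True, answer, "verified")


if __name__ == "__main__":
    known_facts = {"Support is available 24/7"}  # hypothetical evidence store
    result = verify_answer(
        "Support is available 24/7. Refunds take 2 days.",
        is_discriminatory=lambda text: False,
        extract_claims=lambda text: [s.strip() for s in text.split(".") if s.strip()],
        find_evidence=lambda claim: [claim] if claim in known_facts else [],
    )
    print(result)
```

Passing the checks in as functions keeps the pipeline independent of any specific classifier or search provider. This also makes each step easy to test in isolation.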

Especially with regard to LLMs, the EU envisages an obligation to register the model, mark its output, and ensure that the training data can be traced to protect intellectual property rights.
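In its simplest form, "marking output" could mean attaching a machine-readable provenance record to every generated text. The minimal sketch below illustrates this idea. The record fields and identifiers are hypothetical; this is not a format defined by the AI Act. Because metadata like this can simply be stripped, it is not a robust watermark.

```python
import hashlib
from datetime import datetime, timezone
from typing import Any, Dict


def mark_output(text: str, model_id: str, registration_id: str) -> Dict[str, Any]:
    """Wrap generated text with a machine-readable provenance record."""
    return {
        "text": text,
        "provenance": {
            "ai_generated": True,
            "model_id": model_id,                # hypothetical internal model identifier
            "registration_id": registration_id,  # hypothetical registry entry
            "created_at": datetime.now(timezone.utc).isoformat(),
            "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
        },
    }


def is_unmodified(marked: Dict[str, Any]) -> bool:
    """True if the text still matches the hash recorded when it was marked."""
    digest = hashlib.sha256(marked["text"].encode("utf-8")).hexdigest()
    return digest == marked["provenance"]["sha256"]


if __name__ == "__main__":
    marked = mark_output("Hello! How can I help?", "demo-llm-v1", "REG-0001")
    print(marked["provenance"]["ai_generated"], is_unmodified(marked))
```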

Minimal Risk

This category encompasses AI systems that pose minimal risks or fall outside the scope of higher-risk applications. Examples may include basic tools like search engines or spam filters. The regulatory burden for these systems is minimal, aiming to encourage innovation and avoid unnecessary bureaucracy.
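For internal compliance checklists, the four tiers and the obligations summarized above can be captured in a simple lookup table. This is a minimal sketch. The obligations paraphrase this article's summary rather than the legal text, and the use-case mapping is illustrative only. Any use case not in the table is deliberately rejected so that it gets a proper legal classification.

```python
from enum import Enum
from typing import Dict, List, Tuple


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations as summarized in this article (not the legal text).
OBLIGATIONS: Dict[RiskTier, List[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited, apart from strictly prescribed exceptions"],
    RiskTier.HIGH: [
        "transparent documentation",
        "clear user instructions",
        "human oversight",
        "assessment and certification before launch",
        "repeated assessments over the system's life",
    ],
    RiskTier.LIMITED: ["transparency", "user information", "traceability"],
    RiskTier.MINIMAL: [],
}

# Illustrative examples from this article; real classification needs legal review.
EXAMPLE_USE_CASES: Dict[str, RiskTier] = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "recruitment": RiskTier.HIGH,
    "customer support chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> Tuple[RiskTier, List[str]]:
    """Look up tier and obligations; unknown use cases must be classified manually."""
    if use_case not in EXAMPLE_USE_CASES:
        raise KeyError(f"{use_case!r} is not classified yet: escalate to legal review")
    tier = EXAMPLE_USE_CASES[use_case]
    return tier, OBLIGATIONS[tier]


if __name__ == "__main__":
    print(obligations_for("recruitment"))
```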

Finally, besides designing and developing personalized concepts, it is essential to keep users in the loop and to schedule periodic evaluation phases. This ensures that the chosen concepts actually work as expected for your user groups.

How XAI paves the way for trustworthy tech

Trust is a fundamental prerequisite for the successful launch of AI systems. Explainable AI (XAI) methodologies can help increase acceptance of AI-driven recommendations. In addition, regulatory frameworks such as the European Union’s proposed AI Act establish clear and verifiable requirements for the deployment of AI systems. Regulatory compliance is therefore rapidly becoming a prerequisite for market entry. As a result, companies that leverage XAI solutions as well as robust MLOps workflows will have a significant competitive advantage.

Do you want to know more about how to apply explainability to your AI products, or are concerns about user acceptance holding you back from getting started with AI? Get in touch: our experts are happy to develop a custom solution for your business!