Introduction

At the recent World Government Summit in Dubai, NVIDIA CEO Jensen Huang made the following statement: “It is our job to create computing technology such that nobody has to program. And that the programming language is human”.

We put this to the test: a team of 70 Cloudflight engineers from 5 countries tested GitHub Copilot for one month across roughly 10 customer projects. Huge thanks to them, to Microsoft and GitHub for providing invaluable insights and support, and to the customers involved, who trust us with their software.

Additionally, we were able to challenge our own insights against the performance of 1040 participants in our very own Cloudflight Coding Contest (CCC) on 19.04.2024: 299 of them reported not wanting any AI assistance, while 741 were open to using it.

Disclaimer: The outcomes of our GitHub Copilot trial are based on subjective answers from our participating developers and architects. We decided against a longer-term, more controlled study for the following reasons:

- AI assistants update frequently

- No project and no sprint are really the same

- Team compositions change

- Measuring a significant impact on metrics such as story points per sprint or deployments to production per time period would require at least 3 – 6 months across multiple teams to be meaningful

The CCC results we use to verify the assumptions from our GitHub Copilot trial are based on how our participants performed during the coding contest.

Impact on productivity

We consider the outcome of our GitHub Copilot trial positive. If you go in with realistic expectations and know where and how the tool is most likely to help you, we expect you can turn GitHub Copilot into a net positive, just like we did.

After one month, our test participants saw GitHub Copilot as a valuable addition to their tool stack: they estimated saving around 40 minutes per day on average, which equates to roughly 8% of a typical 8-hour workday. This adds up to more than one and a half working days each month. The overall range reveals two extremes: 10 of 70 participants estimated saving as much as one hour per day, while at the other end, participants who got caught playing too much with AI ended up losing around one hour of productive work on some days. We review what we think are the strengths and weaknesses of AI assistants in more detail below.
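The time-savings figures can be double-checked with a few lines of arithmetic. This is only a sketch: the 40 minutes and the 8-hour workday come from the survey, while the 20 working days per month is our own assumption.

```python
# Back-of-the-envelope check of the reported time savings.
# Assumption (not from the survey): 20 working days per month.
MINUTES_SAVED_PER_DAY = 40
WORKDAY_MINUTES = 8 * 60
WORKING_DAYS_PER_MONTH = 20

share_of_day = MINUTES_SAVED_PER_DAY / WORKDAY_MINUTES
minutes_per_month = MINUTES_SAVED_PER_DAY * WORKING_DAYS_PER_MONTH
workdays_per_month = minutes_per_month / WORKDAY_MINUTES

print(f"share of a workday: {share_of_day:.1%}")         # share of a workday: 8.3%
print(f"saved per month: {minutes_per_month / 60:.1f} h")  # saved per month: 13.3 h
print(f"= {workdays_per_month:.2f} workdays")              # = 1.67 workdays
```

With 21 or 22 working days, the monthly figure rises slightly, to around 1.75 to 1.85 days.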

Comparison with status quo

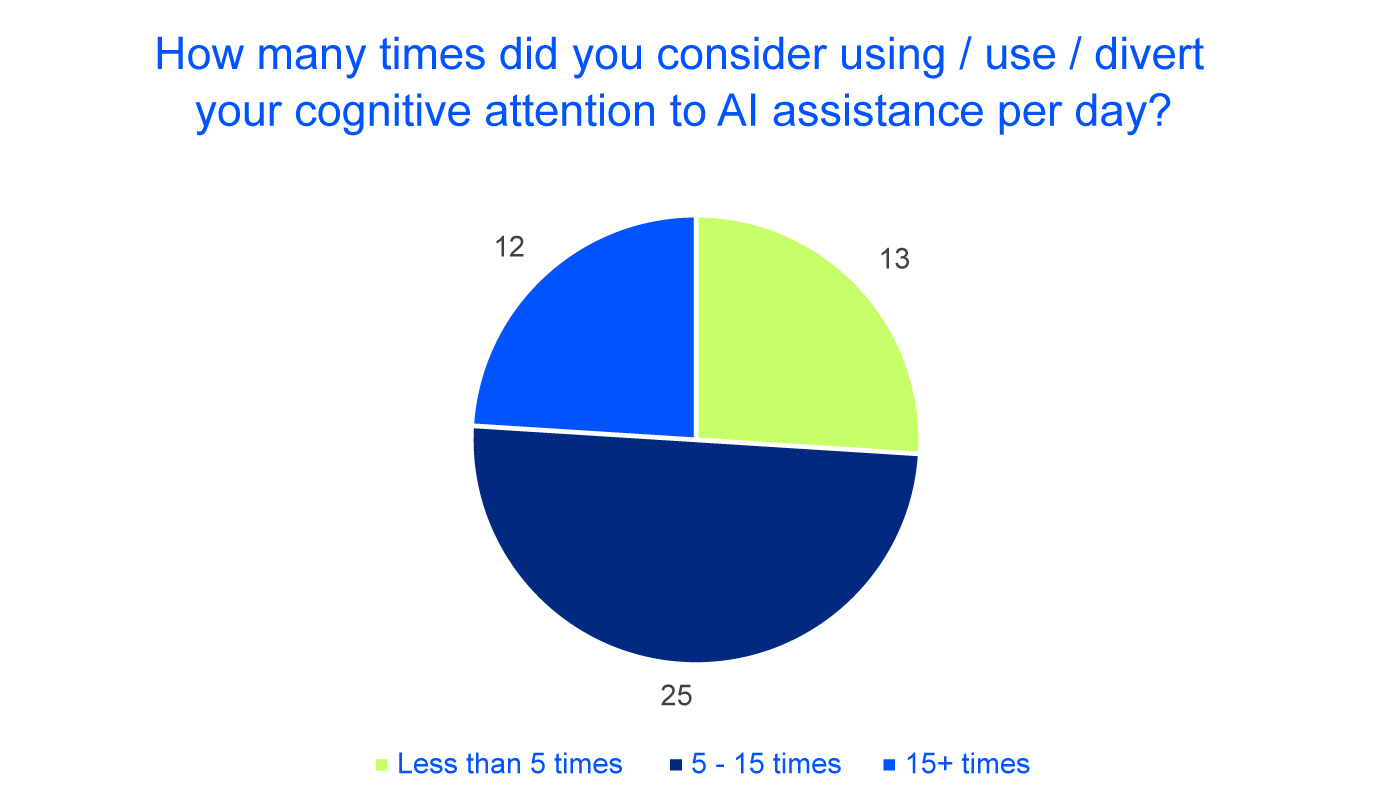

We were also interested in how often our colleagues interact with the AI.

An educated Cloudflight guess of how often the average developer consults documentation (3 – 5 times / day) and searches the web (5 – 10 times / day) reveals usage similar to our AI assistance access patterns. We can imagine interacting with AI replacing traditional web searches in many cases. How often the AI mispredicts, whether it cites the source material so answers can be verified manually (as Phind and Kagi do, for example), and whether the most up-to-date documentation is available will play a large role in winning this race.

Further insights

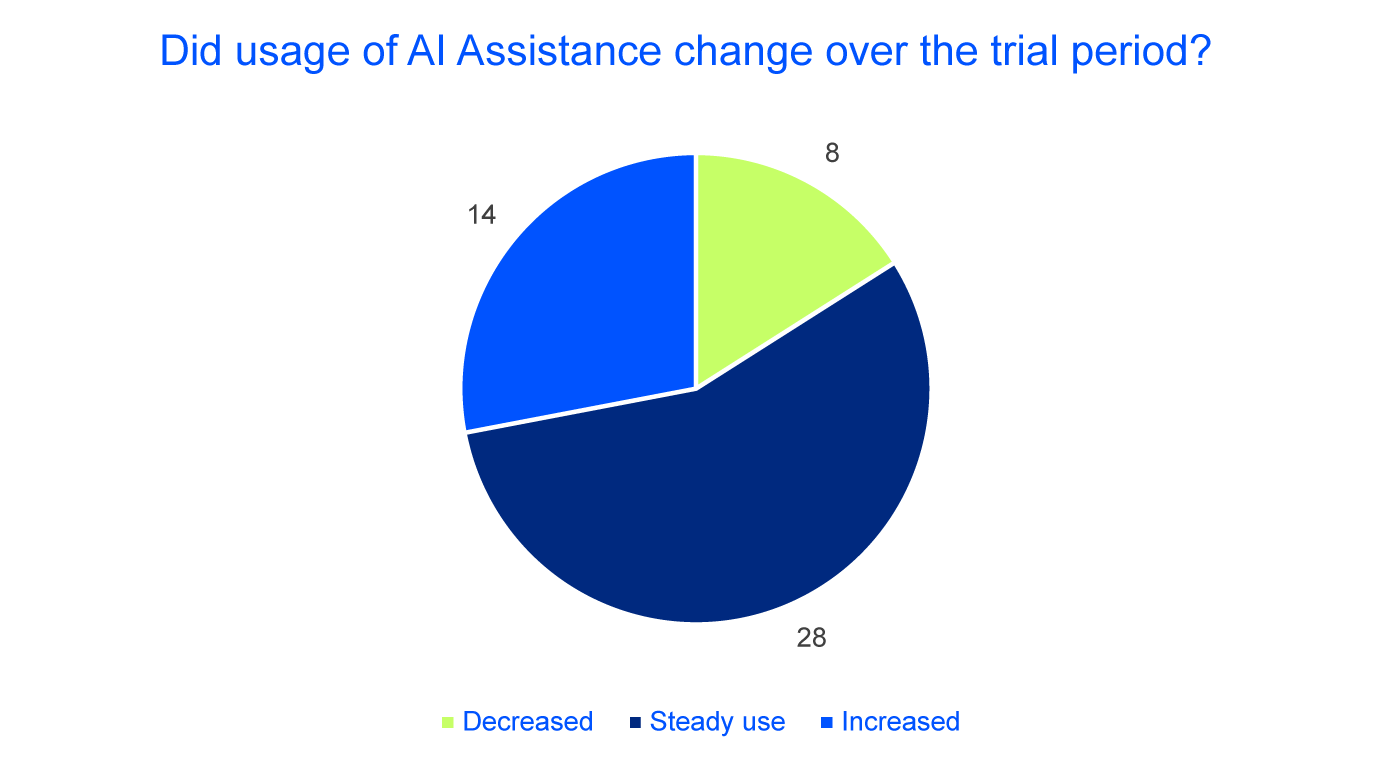

Usage patterns show that our participants kept engaging with AI regularly, without any concerning trends: only 8 out of 50 respondents reduced their use of AI during the trial period.

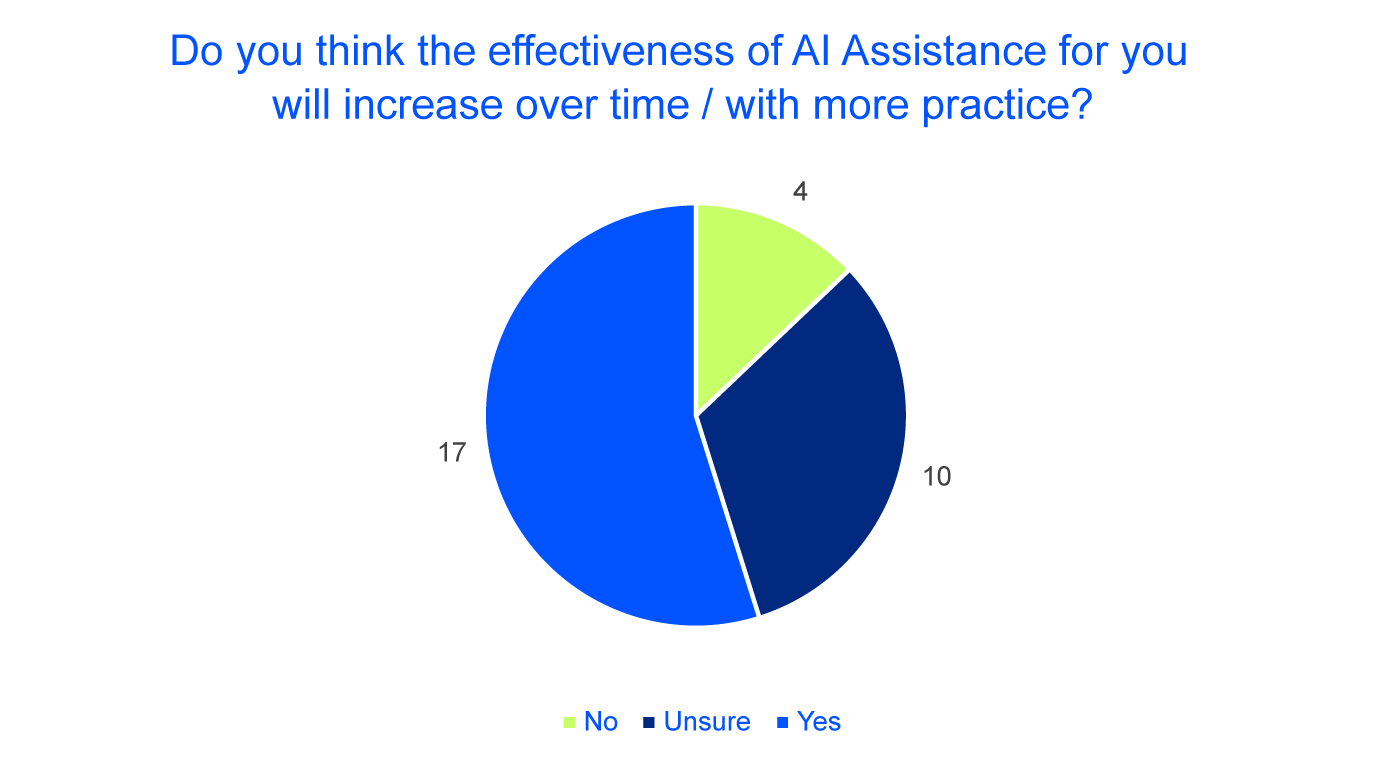

More than half of the participants also expect AI tooling to become more useful as they get more familiar with it.

Strengths and Weaknesses

Overall, we found that GitHub Copilot helped us in seven distinct areas, while we had mixed feelings about two others and found it lacking in three.

Everyday Companion

Generation of Documentation

Since our AI assistants are language models, it should come as no surprise that they are considered helpful here. Combined with the fact that many engineers noticeably dislike writing documentation, and that documentation tends to get outdated easily and should be updated frequently, this is a major win scenario. While reviewing the output is a must with almost every LLM-generated product, the quality of the generated texts and the ability to produce documentation on multiple levels of abstraction blazingly fast outweigh that manual time investment.

Generation of Tests

Both scaffolding tests and creating additional test cases are quite repetitive tasks where GitHub Copilot will help you greatly. By including any existing test setup classes and other test classes in your LLM context, you will achieve a certain level of consistency across test classes. Beware: you cannot rely on the LLM to catch all reasonable test cases for you or to generate perfect test data. Some parts will need to be adapted or crafted by hand, and always thought through thoroughly.
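To make the split between repetitive and hand-crafted tests concrete, here is a small sketch. The function under test, `normalize_email`, is purely hypothetical. The table of cases is the kind of repetitive scaffolding an assistant can extend quickly once one case exists; the edge cases at the bottom are the kind that still need to be thought through by hand.

```python
import unittest


def normalize_email(raw: str) -> str:
    """Hypothetical function under test: trims whitespace and lowercases."""
    return raw.strip().lower()


class NormalizeEmailTest(unittest.TestCase):
    # Repetitive, table-driven cases: easy for an assistant to extend
    # by following the existing pattern.
    CASES = [
        ("alice@example.com", "alice@example.com"),
        ("  Bob@Example.com ", "bob@example.com"),
        ("CAROL@EXAMPLE.COM", "carol@example.com"),
    ]

    def test_table(self) -> None:
        for raw, expected in self.CASES:
            with self.subTest(raw=raw):
                self.assertEqual(normalize_email(raw), expected)

    # Edge cases like these are easy to overlook and are worth
    # deciding on deliberately rather than leaving to the LLM.
    def test_empty_string(self) -> None:
        self.assertEqual(normalize_email(""), "")

    def test_only_whitespace(self) -> None:
        self.assertEqual(normalize_email(" \t\n"), "")


if __name__ == "__main__":
    unittest.main(argv=["normalize_email_test"], exit=False)
```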

Generation of database schemas

Given your data model or entity classes, and the target database and database versioning technology of your choice, GitHub Copilot will get most of the work done correctly within a few seconds. Still, double-check that all necessary keys and indexes have been created; otherwise, consistency and performance issues might bite you later.
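One part of that double-check can be automated. The sketch below uses SQLite from the Python standard library purely for illustration, since the article does not name a database or versioning tool; the `users`/`orders` schema is hypothetical. It lists foreign key columns that are not the leading column of any index, a gap that generated schemas can easily contain, because SQLite does not index foreign key columns on its own.

```python
import sqlite3
from typing import List, Tuple

# Hypothetical schema for two entities, as a generated migration might look.
TABLES = """
CREATE TABLE users (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    id         INTEGER PRIMARY KEY,
    user_id    INTEGER NOT NULL REFERENCES users(id),
    created_at TEXT NOT NULL
);
"""

# The index that is easy to miss.
INDEXES = """
CREATE INDEX idx_orders_user_id ON orders(user_id);
"""


def unindexed_foreign_keys(conn: sqlite3.Connection) -> List[Tuple[str, str]]:
    """Return (table, column) pairs for foreign key columns that are not
    the leading column of any index on their table."""
    missing = []
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    # Table and index names come from sqlite_master, not from user input.
    for table in tables:
        leading_columns = set()
        # index_list rows: (seq, name, unique, origin, partial)
        for index_row in conn.execute(f"PRAGMA index_list('{table}')").fetchall():
            # index_info rows: (seqno, cid, name); seqno 0 is the leading column
            for seqno, _cid, column in conn.execute(
                    f"PRAGMA index_info('{index_row[1]}')").fetchall():
                if seqno == 0:
                    leading_columns.add(column)
        # foreign_key_list rows: (id, seq, table, from, to, ...)
        for fk_row in conn.execute(f"PRAGMA foreign_key_list('{table}')").fetchall():
            if fk_row[3] not in leading_columns:
                missing.append((table, fk_row[3]))
    return missing


if __name__ == "__main__":
    without_index = sqlite3.connect(":memory:")
    without_index.executescript(TABLES)
    print(unindexed_foreign_keys(without_index))  # [('orders', 'user_id')]

    with_index = sqlite3.connect(":memory:")
    with_index.executescript(TABLES + INDEXES)
    print(unindexed_foreign_keys(with_index))  # []
```

A check like this complements, but does not replace, reviewing the generated schema against the queries your application actually runs.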

Rewriting / Refactoring code (e.g. Java to Kotlin)

Providing existing code as context resolves many ambiguities and rules out paths your LLM might take when presented with less information. Since we are essentially translating from one (programming) language to another, we found that these tasks were performed reasonably well. It is also a common use case: think about upgrading library versions, migrating e.g. JavaScript to TypeScript, using existing Java code in a Kotlin project, and similar scenarios.

Generating code similar to already existing code

While similar to the previous point, here we are talking about generating new code rather than changing existing code. Think about your typical CRUDL (create, read, update, delete, list) use case: your app will have a few models to manage (users, invoices, orders, etc.), which need quite a bit of shared functionality for creation, updates, and so on. If you are not using scaffolding for these use cases, mapping out one vertical domain by hand and then generating the second one from the first plus a description of the new domain works great and will save you some repetitive work.
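As a sketch of what that looks like, assume a hypothetical first slice for users, written by hand with an in-memory store standing in for real persistence. Given this slice and a description such as "Invoice with id, user_id and amount_cents", the second slice below is the kind of mirrored code one would expect an assistant to produce; here it is written out by hand for illustration.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


# --- First vertical slice, written by hand (hypothetical "users" domain) ---
@dataclass(frozen=True)
class User:
    id: int
    email: str


class UserRepository:
    """In-memory CRUDL for users; stands in for real persistence."""

    def __init__(self) -> None:
        self._users: Dict[int, User] = {}

    def create(self, user: User) -> User:
        if user.id in self._users:
            raise ValueError(f"user {user.id} already exists")
        self._users[user.id] = user
        return user

    def read(self, user_id: int) -> Optional[User]:
        return self._users.get(user_id)

    def update(self, user: User) -> User:
        if user.id not in self._users:
            raise KeyError(user.id)
        self._users[user.id] = user
        return user

    def delete(self, user_id: int) -> bool:
        return self._users.pop(user_id, None) is not None

    def list_all(self) -> List[User]:
        return sorted(self._users.values(), key=lambda u: u.id)


# --- Second slice, mirroring the first (hypothetical "invoices" domain) ---
@dataclass(frozen=True)
class Invoice:
    id: int
    user_id: int
    amount_cents: int


class InvoiceRepository:
    """In-memory CRUDL for invoices, following the same pattern."""

    def __init__(self) -> None:
        self._invoices: Dict[int, Invoice] = {}

    def create(self, invoice: Invoice) -> Invoice:
        if invoice.id in self._invoices:
            raise ValueError(f"invoice {invoice.id} already exists")
        self._invoices[invoice.id] = invoice
        return invoice

    def read(self, invoice_id: int) -> Optional[Invoice]:
        return self._invoices.get(invoice_id)

    def update(self, invoice: Invoice) -> Invoice:
        if invoice.id not in self._invoices:
            raise KeyError(invoice.id)
        self._invoices[invoice.id] = invoice
        return invoice

    def delete(self, invoice_id: int) -> bool:
        return self._invoices.pop(invoice_id, None) is not None

    def list_all(self) -> List[Invoice]:
        return sorted(self._invoices.values(), key=lambda i: i.id)


if __name__ == "__main__":
    invoices = InvoiceRepository()
    invoices.create(Invoice(id=10, user_id=1, amount_cents=4990))
    invoices.update(replace(invoices.read(10), amount_cents=5490))
    print(invoices.list_all())
```

The more consistent the first slice is, the less the generated second slice needs to be corrected; domain-specific rules (validation, permissions, relations) still need a careful review.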

Support with languages / tools one is not familiar with

A language model can act as your guiding star when dealing with languages or frameworks you are not familiar with. It generates examples that contribute to your growing understanding, it can answer questions faster and sometimes in more detail than the documentation, and it often delivers pieces of the puzzle that only need to be plugged together correctly. Nevertheless, a sound understanding of the underlying engineering basics is currently helpful, if not essential: it prevents you from going down wrong paths that lead to lost time, bad design decisions, and deteriorating software quality.

Rubber duck debugging

Most developers will know this: as soon as you start explaining your problem to someone else (maybe your ever-present rubber duck companion) and carefully go through it step by step, you will often come up with the answer yourself. This technique is called rubber duck debugging (or rubberducking), and, coincidentally or not (maybe we are talking about intelligence after all), it closely mirrors an established technique for querying Large Language Models about more complex problems: chain-of-thought prompting. Essentially, by dividing your problem and solution into multiple steps and revisiting each one, you make a positive outcome more likely, for the language model and for yourself.

Hit-or-Miss scenarios

Now for a few words about scenarios which are a bit of a gamble: You might be satisfied with the output, or you might lose some time.

Inline Code Completion

This one might be temporary and in part a usability issue, but here are a few things we observed:

- AI-assisted completions might get in the way of your IDE's own completions

- For some developers, the default settings and shortcuts required changing their established workflows, which some are unwilling to do without a strong incentive

- IDE completions are snappy, and we are used to that. AI-assisted completions take more time: they require a round trip to the server and significantly more compute. For some people, this is hard to get used to.

Our developers accepted … of the suggestions. This does not consider whether accepted suggestions were kept, changed, or removed afterwards, which means the final share of AI-generated code is almost certainly lower. This leads to a scenario where you actively slow yourself down, only to be disappointed by the suggestion, when you would have been faster writing the line or block yourself in the first place.

Debugging

This is in part a usability topic, and in part not. IDE terminal integration is a must to avoid copying stack traces around too much, and such integrations tend to be fairly new and still under development. But debugging also very often requires the big picture of a sometimes very complex piece of code, and it requires you, the developer, to provide the proper context (by opening the relevant files), which can be really difficult when dealing with an error you know nothing about. So AI assistance will help you with straightforward errors and simple mistakes; beyond that, we don't see how it can help in its current state.

Negative Use-Cases

Creative tasks (Business code generation, optimized code)

At the time of writing, we are not aware of any AI tool, GitHub Copilot or otherwise, that will self-sufficiently generate larger production-ready or even working software end to end. The limitations and bugs in AI tooling, the challenges with understanding larger contexts, and the quality of the output are simply too much of an obstacle as of now. This includes generating larger features, which may require integration across multiple layers, should follow a consistent architectural style, and have to integrate seamlessly with other parts of the software. Therefore, the actual implementation of our features was still done mostly by our great developers, and AI helpfulness was limited to generating small chunks of code.

Cutting edge code

This one is easy: it is not always clear which versions of your libraries the current model really knows about, because you will get conflicting answers. Therefore, if you are using releases from the last < 6 months and their APIs changed, you will get wrong answers, period. We understand that training takes time and resources, but if you want to stay on the cutting edge like we do, AI assistance will hinder you quite a bit.

Tasks which require the “Big Picture” view

As mentioned before, some scenarios, like finding tough errors or making architectural decisions, require you to consider a lot of context at once. Due to technical limitations (the now famous context size), some models simply cannot process enough information at once to answer such a query. While there are techniques to work around this, they are time- and cost-intensive. At the time of writing, GPT-4o, which offers a context size of 128k tokens, had been released one month earlier. We will explore in a future post whether this allows for more reasoning about entire software systems.
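A rough way to judge whether a codebase could fit into a single request is to estimate its token count. This sketch assumes about 4 characters per token, a common rule of thumb for GPT-style tokenizers; real counts depend on the tokenizer and the code. The reserved headroom and the file-suffix filter are hypothetical values chosen for illustration.

```python
from pathlib import Path
from typing import Iterable, Iterator, Tuple

# Assumption: roughly 4 characters per token (rule of thumb, not exact).
CHARS_PER_TOKEN = 4
CONTEXT_TOKENS = 128_000   # context size mentioned above for GPT-4o
RESERVED_TOKENS = 8_000    # hypothetical headroom for the question and the answer


def estimate_tokens(text: str) -> int:
    """Rough token estimate: ceiling division of the length by CHARS_PER_TOKEN."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts: Iterable[str],
                    budget: int = CONTEXT_TOKENS - RESERVED_TOKENS) -> Tuple[int, bool]:
    """Return the estimated total token count and whether it fits the budget."""
    total = sum(estimate_tokens(t) for t in texts)
    return total, total <= budget


def source_texts(root: Path, suffixes: Tuple[str, ...] = (".java", ".kt", ".ts")) -> Iterator[str]:
    """Yield the contents of all source files below root with the given suffixes."""
    for path in root.rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            yield path.read_text(encoding="utf-8", errors="ignore")


if __name__ == "__main__":
    root = Path("src")  # hypothetical project source directory
    if root.is_dir():
        total, fits = fits_in_context(source_texts(root))
        print(f"~{total} tokens, fits into one request: {fits}")
```

Even when a codebase fits, that does not guarantee the model reasons well over all of it; the estimate only tells you whether a single request is possible at all.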

Crosscheck with our CCC results

Now here’s the real deal: in our 04/2024 CCC, a whopping 741 participants said they or their team considered using AI assistance during the contest, compared to 299 who said they did not use any AI assistance.

We see no findings which conflict with any of our earlier statements.

CCC is about getting the correct solution as fast as possible

People using AI assistance were on average more than 10% slower than coders who said they did not use any AI assistance. This matches our assumption that, in many cases, AI alone cannot yet deliver a fully fleshed-out feature or larger solution without the developer's own corrections and skills. The coding contest is not about quality, quantity, documentation, maintainability, and the other factors where the efficiency gains are to be found. It is about getting the correct solution as fast as possible, often using sophisticated algorithms. There is room for improvement on the AI side here.

Additionally, 679 users took part in an optional post-contest survey and provided further valuable insights into their experiences with AI in general and, for those who used AI assistance, into their performance during the contest. Thanks again to everyone who was kind enough to answer. We were able to gain the following additional insights:

The race to become the go-to tool in the AI world is still open: while the current landscape is dominated by ChatGPT and GitHub Copilot, our participants mentioned having used 27 different tools. The most used after Microsoft / OpenAI were Google’s Gemini/Bard, Blackbox AI and Claude, followed by a few users of Replit, Phind, Mistral and others. There are still quite a few issues to solve, and solving them may crown a new champion one day.

AI Assistance is powerful, but not for everyone – and changes frequently

Finally, we would like to spend our last words on subjective perceptions: among the 287 respondents of our post-contest AI survey who mentioned using AI during the contest, 65 feel that AI lost them time, 132 say it saved them time, and 90 are unsure. There is no clear answer here: you would only find the truth if the same coders repeated the same assignment (without knowing it beforehand) without using AI, or by conducting longer trials with a larger audience to smooth out most uncertainties. And even then, given the release cadence of newer models, any results would probably be outdated before the study completes. Our recommendation is this: once in a while, stop and reflect. Would you have been faster just typing the line yourself, even though having it generated automatically might seem more convenient? Are you keeping your coding skills sharp? Are you thinking the suggestions through, or blindly accepting what you get and calling it a day?

At the end of the day, AI assistance gives you possibly the most powerful toolset since the advent of modern IDEs, Google, Stack Overflow and the like. We can truthfully say we are excited to be along for the ride from the very beginning and are eager to see where the journey leads.

Conclusion and next steps

We consider the outcome of our GitHub Copilot trial positive. As a result, all of our project teams can currently opt in to using GitHub Copilot, provided the respective customer consents. This requirement has legal reasons, as the legal situation is still unclear, and it is likely to be adapted in the future as things evolve.

The most concrete next step for Cloudflight is to expand the use of AI assistance to all roles: Requirements Engineering, QA and UX / UI Designers, to name a few. We are currently in a closed beta phase of providing our employees with chat-ui (https://github.com/huggingface/chat-ui/), the Hugging Face open-source UI for interacting with large language models. It is similar to what most people know and have used: the ChatGPT user interface. As a Microsoft Solution Partner and Specialized Partner, we have connected it to a Europe-based Azure OpenAI instance. Stay tuned for more updates on those efforts.

Last but not least, we are constantly evaluating changes in models with respect to cost, speed, quality, and their performance in new areas (e.g. Scaffolding) – or areas which are not the strong suit of current models, as mentioned above. We will keep you updated on any developments here.

All the best and thank you for reading.

Vinci